Why Open Hardware Still Matters

The Open Compute Project (OCP) was founded in 2011 to give hardware the same freedom that open-source software gave operating systems. It breaks vendor lock-in, speeds innovation, and cuts wasted watts and dollars. Thirteen years later, the results are visible well beyond hyperscalers. Tier-2 colocations, enterprises, and edge sites now adopt OCP gear because its open specs lower cost and ease multi-vendor sourcing. They also expose clear interfaces for serviceability and monitoring.

Data centers are the backbone of modern society, providing the foundation for artificial intelligence (AI) development, cloud solutions, and the internet services we all rely on. Despite this critical importance, the hardware that powers them has historically been proprietary. The result has been excessive cost for customers and limited flexibility and innovation in new technologies.

What is the Open Compute Project?

The Open Compute Project (OCP) is a movement that has changed the way data centers are designed and constructed. Facebook (now Meta) created OCP to escape proprietary hardware. The team needed freedom from closed solutions and the limits of legacy enterprise data centers.

The idea behind OCP is simple: create an open standard that makes every aspect of a modern data center open. This includes rack sizes, connector types, enclosures, and power systems, so data centers can be built from parts sourced from different suppliers. No single supplier locks customers into an ecosystem, and that freedom is a major advantage. Users can choose the best components without tying themselves to any brand or technology.

The OCP’s Modular Design Philosophy

One focus for OCP is modular designs capable of expansion, growth, and upgradability. OCP spans servers, accelerators, racks, power units, storage, and network switches. Each module can be expanded, upgraded, or replaced as needs evolve. This flexibility allows data centers to adapt to changing demands and technologies over time, reducing the need for costly upgrades and minimizing downtime.

OCP’s open-source model invites industry, researchers, and the community to co-develop innovative technologies. Their contributions easily integrate into OCP data centers. The OCP also helps to create a community-driven approach, with users and engineers working together to improve and refine the standard over time.

As hardware becomes more modular and customizable, the supporting power infrastructure must evolve just as rapidly.

The Role of Power Supplies in the OCP

In hyperscale infrastructure, attention often centers on computing performance, quantified in floating-point operations per second (FLOPS), or on storage capacity in petabytes. However, neither metric holds value without reliable, efficient power delivery. In OCP environments, the power infrastructure must meet modern high-density demands while delivering top performance, energy efficiency, and cost optimization at scale.

Key performance metrics for modular power supplies in OCP hardware include:

- High efficiency: Minimizes energy loss, thermal output, and cooling demand.

- Compact form factors: Supports high-density data center racks.

- Hot-swappable, scalable architecture: Sustains uptime and facilitates upgrades.

These open hardware-based power systems are foundational to modern data center power strategies. Even small gains in power conversion efficiency scale significantly at hyperscale, yielding large reductions in total energy consumption, operational overhead, and carbon footprint.
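To see how a small efficiency gain scales, the arithmetic can be sketched in a few lines of Python. The 1 MW IT load and the 95% and 96% efficiencies are illustrative assumptions, not figures from any OCP specification, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 8760.0  # non-leap year, continuous operation assumed


def annual_input_energy_kwh(it_load_kw: float, efficiency: float) -> float:
    """Energy drawn upstream of the power supplies to deliver it_load_kw all year."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return it_load_kw / efficiency * HOURS_PER_YEAR


def annual_savings_kwh(it_load_kw: float, eff_before: float, eff_after: float) -> float:
    """Input energy saved per year by moving from eff_before to eff_after."""
    return (annual_input_energy_kwh(it_load_kw, eff_before)
            - annual_input_energy_kwh(it_load_kw, eff_after))


if __name__ == "__main__":
    # Hypothetical 1 MW IT load; conversion efficiency improves from 95% to 96%.
    saved = annual_savings_kwh(1000.0, 0.95, 0.96)
    print(f"Saved per year: {saved:,.0f} kWh")
```

Under these assumptions, the one-point gain saves roughly 96,000 kWh per year for each megawatt of IT load, before counting any reduction in cooling demand.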

Improved power efficiency directly supports higher power density: the ability to deliver more wattage in less physical space. This is essential as next-generation computing workloads increasingly rely on AI accelerators, GPUs, and custom silicon, all of which elevate rack-level power demands.

Open Rack Voltage Specification

The most visible sign of OCP’s “open” philosophy lives in the rack itself. Each Open Rack (OR) release tightly defines the bus‑bar voltages, dictating how power shelves and downstream converters must behave.

OR V2 (2017) shipped with two parallel buses. A 12 V rail kept older systems happy, while a 48 V “high-voltage class” rail met emerging efficiency targets. That dual-rail compromise, however, meant extra copper, thicker conductors, and twice the downstream conversion hardware.

OR V3 (2022) finishes the migration. It eliminates the 12 V backbone entirely and standardizes the rack on a single 48 V class bus. The nominal set-point is tightened to 51 V (or 54 V in transition racks), and the allowable window narrows to 46 ~ 52 V (or 52 ~ 56 V).

| Spec | Bus Option(s) | Shelf Set-Point | Bus Voltage Window | Notes |
|---|---|---|---|---|
| OR V2 (2017) | 12 V legacy | 12.5 V ± 0.1 V | 12.2 V ± 0.4 V | Mandatory for any point measured on the bar |
| OR V2 (2017) | 48 V class | 54.5 V | 40 ~ 59.5 V | Stays below the 60 V Safe Extra-Low Voltage (SELV) limit |
| OR V3 (2022) | High-voltage only | 51 V or 54 V | 46 ~ 52 V or 52 ~ 56 V | Dual set-points allow backward compatibility as the ecosystem transitions |

Table 1: Comparison of OR V2 and OR V3 electrical requirements
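As a minimal sketch, the windows in Table 1 can be encoded as a lookup and used to check a measured bus voltage. The dictionary keys and the function name are hypothetical, and pairing the 51 V set-point with the 46 ~ 52 V window (and 54 V with 52 ~ 56 V) is an assumption based on the order in which the values are listed.

```python
# Bus voltage windows in volts, transcribed from Table 1.
# The pairing of each OR V3 set-point with a window is an assumption.
BUS_WINDOWS = {
    "OR V2 12 V legacy": (11.8, 12.6),     # 12.2 V +/- 0.4 V
    "OR V2 48 V class": (40.0, 59.5),
    "OR V3 51 V set-point": (46.0, 52.0),
    "OR V3 54 V set-point": (52.0, 56.0),
}


def in_window(bus: str, measured_v: float) -> bool:
    """Return True if measured_v lies inside the window for the named bus."""
    low, high = BUS_WINDOWS[bus]
    return low <= measured_v <= high


if __name__ == "__main__":
    for bus, volts in [("OR V3 51 V set-point", 50.4),
                       ("OR V3 51 V set-point", 45.5),
                       ("OR V2 12 V legacy", 12.3)]:
        status = "OK" if in_window(bus, volts) else "OUT OF WINDOW"
        print(f"{bus}: {volts} V -> {status}")
```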

By eliminating the 12 V rail, V3 cuts copper losses, simplifies interconnect hardware, and aligns new OCP racks with the rising current demands of modern processors and accelerators. It also aligns with fixed-ratio 48 V-to-POL converters now common on AI accelerators and high-core-count CPUs.
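The copper-loss argument follows from I²R: for the same delivered power, a higher bus voltage means proportionally less current, and resistive loss falls with the square of the current. The sketch below illustrates this with a hypothetical 15 kW rack and a lumped busbar resistance of 0.1 mΩ. Both values are assumptions chosen for illustration only.

```python
def busbar_loss_w(power_w: float, bus_v: float, resistance_ohm: float) -> float:
    """Resistive loss in a busbar modeled as one lumped resistance."""
    current_a = power_w / bus_v           # I = P / V
    return current_a ** 2 * resistance_ohm  # P_loss = I^2 * R


if __name__ == "__main__":
    POWER_W = 15_000.0         # hypothetical rack load
    RESISTANCE_OHM = 0.0001    # hypothetical lumped busbar resistance

    loss_12v = busbar_loss_w(POWER_W, 12.0, RESISTANCE_OHM)
    loss_48v = busbar_loss_w(POWER_W, 48.0, RESISTANCE_OHM)
    print(f"12 V bus loss: {loss_12v:.2f} W")
    print(f"48 V bus loss: {loss_48v:.2f} W")
    print(f"Ratio: {loss_12v / loss_48v:.1f}x")
```

Whatever power and resistance are chosen, quadrupling the bus voltage cuts the loss in the same conductor by a factor of 16.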

In all versions, regulated DC is carried on blind-mate copper busbars that span the full rack height, enabling hot-swappable power shelves and IT gear without manual cabling.

Together, these electrical standards and mechanical modularity create a resilient foundation for scalable, efficient power delivery in OCP ecosystems. These standards prepare the ground for fault isolation, telemetry, and hot-swap integration. Modern front-end power supplies address these needs.

Power Challenges in Hyperscale Environments

As AI accelerators push rack loads toward, and in some deployments beyond, 100 kW, three constraints dominate the power‑delivery conversation:

- Copper losses: Even at 48 ~ 54 V, resistive drop across vertical bars can waste >1 kW per rack if not engineered carefully. OR V3 mitigates this with thicker cross‑sections and shorter bar pairs.

- Thermal head‑room: High inlet temperatures plus liquid‑cooled cold plates require PSUs to maintain full output at 45°C ambient with 20% derating head‑room.

- Monitoring granularity: Fleet operators now demand per‑shelf energy‑per‑compute metrics; hence the mandatory PMBus/CAN hooks.

To meet these expectations, OCP-compliant power shelves incorporate intelligent telemetry over Power Management Bus (PMBus), Controller Area Network (CAN), and Ethernet. The protocols provide real-time monitoring of voltage, current, temperature, and fault conditions. This visibility enables predictive maintenance, dynamic load balancing, and proactive fault mitigation, minimizing downtime and optimizing power delivery across compute-intensive workloads.
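To make the telemetry path concrete, the sketch below decodes a raw 16-bit word in the PMBus LINEAR11 format, which many PMBus commands use for readings such as current and temperature. Whether a given shelf reports a particular value in LINEAR11 is an assumption here, because the data format depends on the command and the device. The function name and the sample word are hypothetical.

```python
def decode_linear11(word: int) -> float:
    """Decode a PMBus LINEAR11 word into a real-world value.

    Bits 15..11 hold a 5-bit two's-complement exponent N.
    Bits 10..0 hold an 11-bit two's-complement mantissa Y.
    The encoded value is Y * 2**N.
    """
    if not 0 <= word <= 0xFFFF:
        raise ValueError("word must be a 16-bit unsigned value")

    exponent = word >> 11
    mantissa = word & 0x7FF

    # Sign-extend both fields from two's complement.
    if exponent > 0x0F:
        exponent -= 0x20
    if mantissa > 0x3FF:
        mantissa -= 0x800

    return mantissa * 2.0 ** exponent


if __name__ == "__main__":
    raw = 0xF0CC  # hypothetical reading: exponent -2, mantissa 204
    print(f"0x{raw:04X} -> {decode_linear11(raw)}")
```

In practice the raw word would come from a bus transaction issued by the rack controller. That part is omitted because it depends on the host hardware and driver.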

In addition, features such as inrush current limiting, voltage sequencing, and hot-swap interlocks protect both individual modules and upstream equipment during live insertion or removal. Combined with mechanical standardization, these capabilities support efficient upgrades, reduce total cost of ownership, and maximize uptime in high-density computing environments.

Design Expectations for Modern Power Supplies

To remain competitive with proprietary systems, OCP-compliant designs must meet rigorous performance, efficiency, and integration demands. AI, machine learning (ML), and edge processing are reshaping computing environments. Power supplies must therefore deliver more performance in less space while preserving reliability and interoperability.

A compliant OCP power shelf must:

- Deliver the correct bus voltage at its output bolts (see Table 1), compensating for IR drop so that on‑bar measurements remain within spec.

- Provide hot‑swap capability via blind‑mate connectors and internal OR‑specified ORing/disconnect circuitry.

- Expose telemetry and control over the Power‑Management Bus (PMBus) and optional Controller Area Network (CAN) interfaces for rack‑level orchestration.
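The IR-drop compensation in the first requirement can be sketched as a simple calculation. The model below lumps the whole bar into one resistance and assumes the full load current flows to the farthest point, which is a pessimistic simplification. The current and resistance values are hypothetical.

```python
def farthest_point_voltage(shelf_output_v: float,
                           load_current_a: float,
                           path_resistance_ohm: float) -> float:
    """Voltage seen at the far end of the bar after the IR drop."""
    return shelf_output_v - load_current_a * path_resistance_ohm


def required_output_voltage(target_bar_v: float,
                            load_current_a: float,
                            path_resistance_ohm: float) -> float:
    """Shelf output voltage needed to hold target_bar_v at the far end."""
    return target_bar_v + load_current_a * path_resistance_ohm


if __name__ == "__main__":
    CURRENT_A = 300.0        # hypothetical load current
    RESISTANCE_OHM = 0.001   # hypothetical lumped path resistance

    far_end = farthest_point_voltage(51.0, CURRENT_A, RESISTANCE_OHM)
    needed = required_output_voltage(51.0, CURRENT_A, RESISTANCE_OHM)
    print(f"Far-end voltage with 51.0 V at the shelf: {far_end:.2f} V")
    print(f"Shelf output needed for 51.0 V at the far end: {needed:.2f} V")
```

A real shelf would also have to keep its own output inside the permitted window, so compensation of this kind is bounded by the limits in Table 1.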

Efficiency has become a core specification for data center power systems, and rising rack loads push power-supply makers to increase density as well. Some front-end units now exceed 100 W/in³ while still converting power at >96% efficiency across typical loads. Voltage regulators must deliver over 94% efficiency at thermal design power (TDP) for 1.8 V outputs, and above 89% efficiency for rails at or below 1 V.
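Because the front-end supply and the voltage regulator sit in series, their efficiencies multiply. The sketch below treats the thresholds quoted above (96%, 94%, and 89%) as exact stage efficiencies, which is a simplifying assumption. Real chains may include further stages, and the function name is hypothetical.

```python
import math
from typing import Iterable


def end_to_end_efficiency(stage_efficiencies: Iterable[float]) -> float:
    """Overall efficiency of conversion stages connected in series."""
    stages = list(stage_efficiencies)
    if any(not 0.0 < eff <= 1.0 for eff in stages):
        raise ValueError("each stage efficiency must be in the range (0, 1]")
    return math.prod(stages)


if __name__ == "__main__":
    # Front-end supply followed by a voltage regulator (assumed two-stage chain).
    chain_1v8 = end_to_end_efficiency([0.96, 0.94])  # 1.8 V output
    chain_1v0 = end_to_end_efficiency([0.96, 0.89])  # rail at or below 1 V
    print(f"1.8 V rail chain: {chain_1v8:.2%}")
    print(f"<=1 V rail chain: {chain_1v0:.2%}")
```

Under these assumptions, roughly 10% of input power is lost before it reaches a 1.8 V load and close to 15% before it reaches a sub-1 V rail, which is why each stage's efficiency target matters.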

The standards help engineers cut thermal load per watt and align power modules with airflow. They also maximize compute density within each rack unit. In hyperscale environments, even a one-percent increase in power supply efficiency can yield substantial energy and cost savings across large deployments.

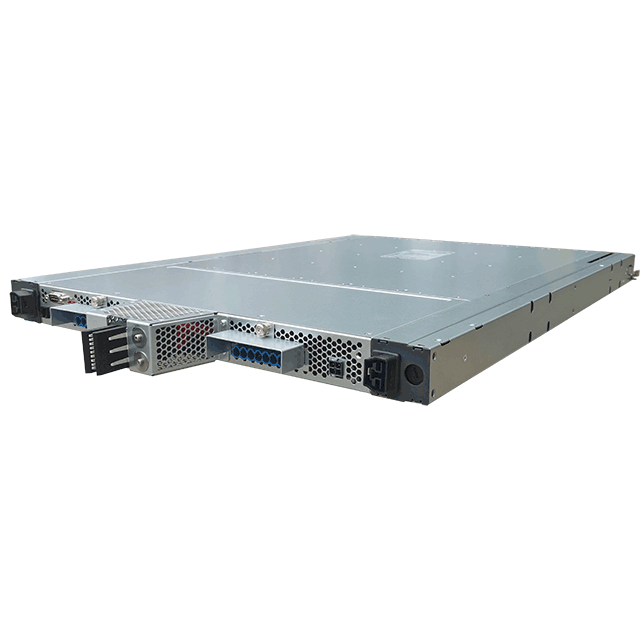

Form Factor and Modularity in OCP Power Design

Beyond electrical performance, OCP power supply design is deeply rooted in mechanical standardization. A foundational element of this modular approach is the Open Unit (OU), a physical unit of rack height defined as 48 mm (about 1.89 in). Most power supplies and power shelves are designed in 1 OU or 2 OU formats to align with these standards.

These modular units are front-mounted within racks and interface with common busbars using blind-mate connectors. This architecture supports both shallow (660 mm) and deep (800 mm) racks, making it highly adaptable across enterprise, cloud, and edge deployments.

Hot-swap functionality is built into the physical design, enabling service without disconnection or system downtime. Additionally, airflow is managed through standardized intake and exhaust paths to align with system-level thermal zones.

This level of mechanical interoperability reduces complexity, supports vendor-agnostic integration, and enables efficient upgrades or replacements without altering upstream compute or storage payloads.

The Future of Open-Source Power Supply Designs

Cloud, AI, and ML workloads are pushing rack power budgets well beyond 100 kW, making high-density, high-efficiency delivery essential. OCP tackles this demand with open, standards-based power shelves that any qualified vendor can populate with swappable modules.

Because mechanical and electrical interfaces are public, a supplier can release a modern Silicon Carbide (SiC) or Gallium Nitride (GaN) based module today, and operators can slot it into existing OCP racks tomorrow with no redesign and no proprietary lock-in. The open model speeds adoption of new topologies. It also boosts competition and keeps costs in check.

Enabling Rapid Prototyping for Custom Power Solutions

Open hardware also invites end users to contribute improvements. Enterprises can prototype custom supervisory firmware, advanced telemetry, or thermal enhancements and share them back to the community. The result is a continuous innovation loop that strengthens supply-chain resilience, shortens design-to-deployment cycles, and improves total cost of ownership.

As power envelopes rise, OCP’s open-source model will stay a key engine for efficiency, interoperability, and rapid rollout. Proprietary ecosystems struggle to match these advantages.

Bel Fuse equips OCP-aligned infrastructures with modular, high-efficiency power solutions engineered for next-generation data-center performance. Explore our OCP-compliant power shelves and download the full specifications.
