The rapid rise of AI is transforming modern industries, but behind this breakthrough lies an overlooked challenge: escalating energy consumption. AI models, especially the large language models (LLMs) and deep learning systems behind applications such as ChatGPT, require immense computational resources, putting unprecedented strain on data center power infrastructure. This article examines the unseen costs of artificial intelligence: power demands, sustainability concerns, and the solutions driving greater efficiency.

AI Power Demand and Energy Consumption Challenges

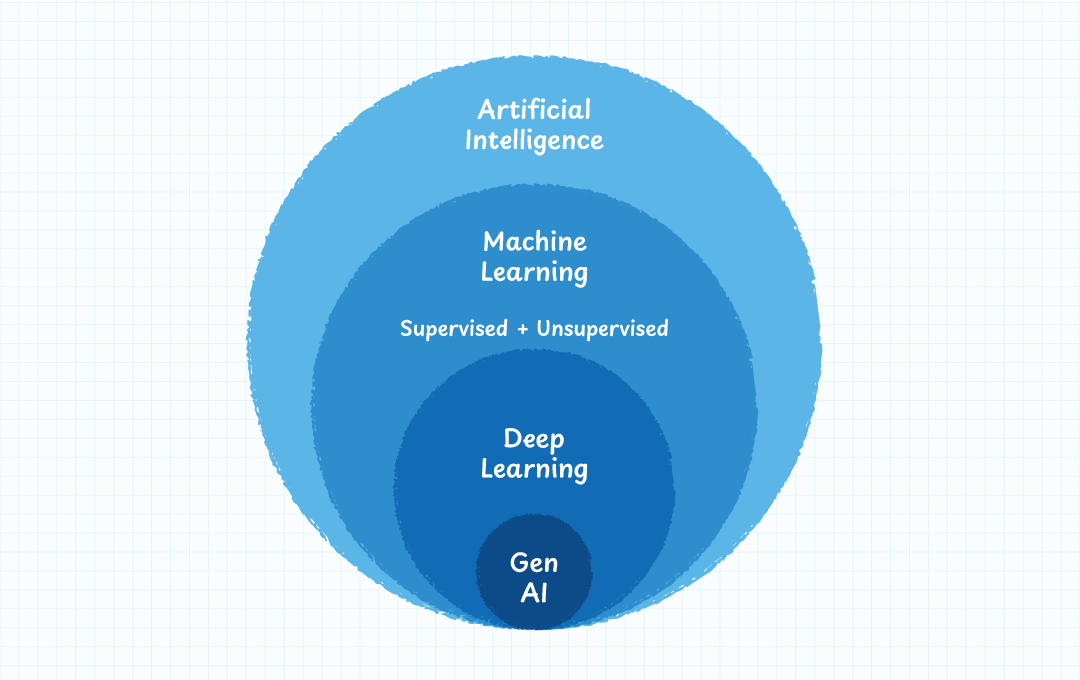

Creating AI models capable of speech recognition, problem solving, and reasoning requires enormous amounts of data and training times that can span weeks, months, and in some cases, years. Such large data sets are necessary to train the AI model with as many examples as possible to ensure accuracy, while the training time stems from the sheer size of the models and the calculations used to adjust their weights.

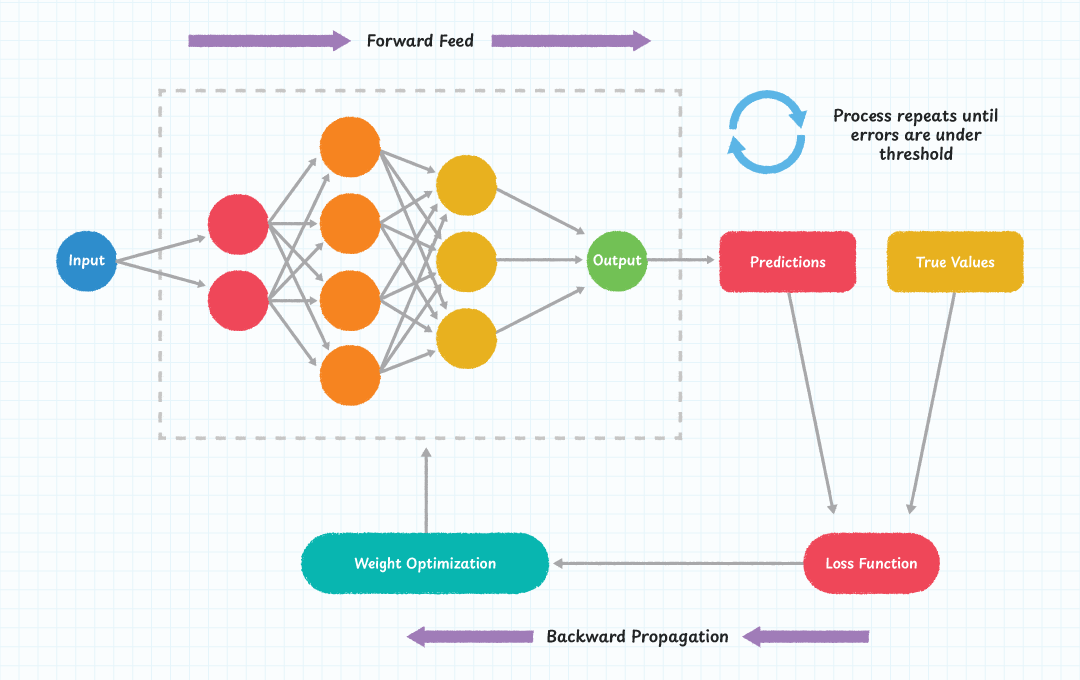

During training, the neural network (the brain of the AI) repeatedly performs a forward pass to produce a result, checks that result against the expected answer, and then makes a backward pass to adjust its weights. This iterative process is repeated many times across massive datasets. The algorithms involved rely heavily on matrix and vector operations, which GPUs can execute in parallel far more efficiently than CPUs, making GPUs the preferred choice. While this training method works, it comes at a serious energy cost.
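To make the forward, check, and adjust cycle concrete, here is a minimal sketch in plain Python. It fits a single straight line rather than a neural network, and the function name, learning rate, epoch count, and data are all illustrative assumptions. Real AI training runs the same three steps over billions of weights, which is where the energy cost comes from.

```python
# Toy training loop: fit y = w * x + b with plain gradient descent.
# Every name and number here is an illustrative assumption.

def train(xs, ys, lr=0.05, epochs=500):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: compute predictions with the current weights.
        preds = [w * x + b for x in xs]
        # Result checking: compare predictions against the known answers.
        errors = [p - y for p, y in zip(preds, ys)]
        # Backward adjustment: gradients of the mean squared error.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Even this two-weight example needs hundreds of passes over its data to settle close to the underlying values of 2 and 1.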

Sustained Power Draw After Model Deployment

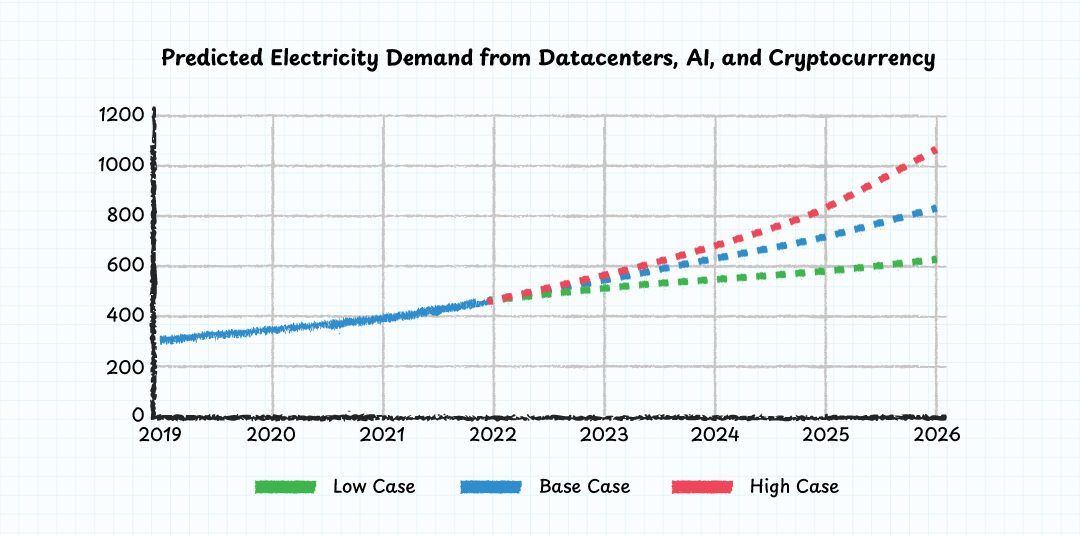

But even once a model is trained, the energy demands do not go away, as running the model to serve millions of clients consumes a considerable amount of energy. The cumulative effect of the energy requirements for both training and inference is that AI is quickly becoming a significant driver of energy demand from data centers. In some cases, companies are exploring nuclear energy as a potential solution to meet the growing energy needs of such data centers.

Environmental Impact of AI Energy Use

The energy requirements of modern AI systems are nothing short of staggering, with some reports suggesting that training a modern AI language model can produce in excess of 8,000 tons of CO2. Such immense energy consumption impacts every aspect of data centers, requiring engineers to carefully consider numerous factors when designing and implementing these systems.

Arguably the biggest challenge faced by AI service providers is the distribution and conversion of energy. The sheer scale of modern AI systems requires massive amounts of power, which can be especially difficult to deliver to remote data centers.

Delivering Scalable Power to High-Density AI Systems

The high power density of modern GPUs, combined with their large size, can also make it challenging to dissipate heat efficiently, leading to issues such as GPU failure and system downtime. This has already surfaced in practice: when ChatGPT launched image generation, OpenAI's CEO remarked that the company's GPUs were "melting" under the load. Power throttling can relieve the problem temporarily, but it can significantly reduce the performance of AI services and, in turn, drive down profit.

However, the power requirements of GPUs are not the only challenge currently facing AI systems—existing power distribution systems are also under immense strain. As GPUs grow in size and complexity, efficiently delivering power through traditional systems becomes increasingly challenging. The need for high-speed memory in modern AI models further amplifies power consumption, compounding the difficulty of managing energy distribution effectively.

Improving Power Supply Efficiency for AI Systems

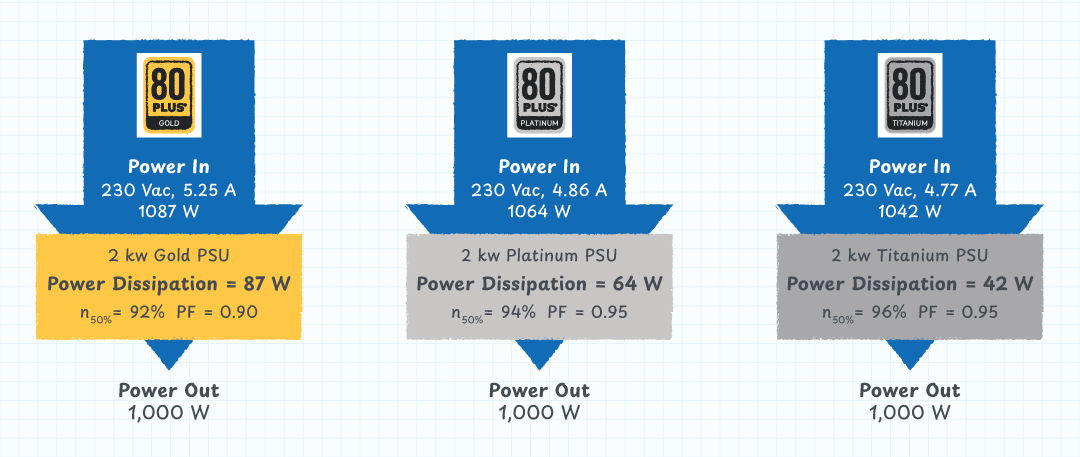

Improving energy efficiency in AI training is a complex challenge that can be approached from several angles. One approach is to simplify the AI models themselves, reducing their complexity and size while maintaining their performance. Techniques include pruning unnecessary layers, compressing weights (bit reduction), and knowledge distillation. On the power supply side, there are also a number of techniques that engineers can deploy to reduce losses as much as possible.
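As an illustration of the weight compression (bit reduction) mentioned above, the sketch below maps floating-point weights to signed 8-bit integers using a single shared scale factor. This is one simple quantization scheme chosen for clarity, not a description of any particular framework, and the function names and sample weights are assumptions.

```python
# Minimal sketch of symmetric 8-bit weight quantization.
# Function names and sample weights are illustrative assumptions.

def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the quantized integers."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error = {max_error:.5f}")
```

Storing each weight in 8 bits instead of a 32-bit float cuts the memory for those weights to a quarter, at the cost of a small rounding error per weight.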

One such technique is to use high-frequency switching converters, which allow smaller inductors and capacitors and can reduce the energy losses associated with those components (this is where wide-bandgap technologies like SiC and GaN come into the picture). Another technique is to implement advanced power management ICs that can optimize energy conversion and minimize losses.

When dealing with power demands as high as those present in AI systems, even a one percent increase in efficiency can have a major impact. Reducing the energy wasted during power conversion increases the power density of the supply, allowing more power to be delivered to the connected system.
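A quick calculation shows why a single percentage point matters. The sketch below assumes a hypothetical 1 MW load running continuously and compares conversion efficiencies of 94% and 95%. These figures are chosen only for illustration and do not describe any specific product or facility.

```python
# Conversion loss at two efficiency levels for a hypothetical 1 MW load.
# All figures are illustrative assumptions.

def conversion_loss_kw(load_kw, efficiency):
    """Power lost in conversion while delivering load_kw to the load."""
    input_kw = load_kw / efficiency
    return input_kw - load_kw

load_kw = 1000.0  # assumed load, running around the clock
loss_before = conversion_loss_kw(load_kw, 0.94)
loss_after = conversion_loss_kw(load_kw, 0.95)
saved_kw = loss_before - loss_after
saved_mwh_per_year = saved_kw * 24 * 365 / 1000.0
print(f"saved: {saved_kw:.1f} kW, {saved_mwh_per_year:.0f} MWh per year")
```

Under these assumptions the one-point gain avoids roughly 11 kW of continuous loss, or about 98 MWh over a year, before counting the cooling energy that would have been needed to remove that heat.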

Thermal Management and GPU Stress

This larger power delivery capability enables the use of more powerful processing units, which can handle complex AI models more efficiently. Lower energy losses also mean less waste heat, which allows the system to operate at higher power levels for longer. This can be particularly beneficial in data centers, where the energy efficiency of the entire system is critical for reducing operational costs and environmental impact.

Keeping a server's heat output within its existing envelope while increasing the power delivered also means that preexisting thermal management systems do not need adjusting. This is especially important for data centers where air-conditioning units are fixed and difficult or expensive to replace. As such, greater efficiency in power supplies directly results in the ability to use more powerful hardware, all while leaving existing infrastructure in place.
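The link between efficiency and thermal headroom can be sketched with a little arithmetic. The example below assumes, purely for illustration, that the only heat of interest is the loss inside the power supply itself and that the existing cooling can remove a fixed 60 W from it. The function name and every number are hypothetical, and the heat produced by the load is deliberately ignored.

```python
# Output power available within a fixed power-supply loss budget.
# Simplified model: only heat generated inside the supply is considered.

def max_output_w(loss_budget_w, efficiency):
    """Largest output power whose conversion loss stays within loss_budget_w.

    Loss = output * (1 - efficiency) / efficiency, solved here for output.
    """
    return loss_budget_w * efficiency / (1.0 - efficiency)

loss_budget_w = 60.0  # assumed heat the existing cooling removes from the supply
out_94 = max_output_w(loss_budget_w, 0.94)
out_96 = max_output_w(loss_budget_w, 0.96)
print(f"94%: {out_94:.0f} W, 96%: {out_96:.0f} W")
```

In this simplified model, raising efficiency from 94% to 96% lifts the deliverable output from about 940 W to about 1440 W without increasing the heat generated in the supply.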

The Future of Sustainable AI Processing

The integration of AI into various sectors of society has proven to have many benefits, including its ability to streamline complex, language-based tasks. However, the implementation of AI on a large scale is not without challenges, the most significant hurdle being its high energy demand.

The computational power required to run AI models is growing exponentially, which poses a problem both for the power grid and for the infrastructure supporting these systems.

Infrastructure and Grid Limitations

On a macro level, the electrical grid in many regions is simply not equipped to handle the additional load imposed by widespread AI deployment. On a micro level, individual server racks housing GPUs and other hardware face their own issues.

Such components require significant amounts of power to function effectively, while the thermal management of these systems is always a central concern, as excessive heat can lead to hardware failures and reduced performance.

Power Supply Innovation: The Path to Sustainable AI

One promising solution to these energy-related challenges lies in the development and deployment of advanced front-end power supplies. By using power supplies that take advantage of the latest technologies (e.g., SiC and GaN) in combination with highly efficient topologies, the overall efficiency of servers can be increased, allowing for greater processing power while maintaining current thermal management systems.
